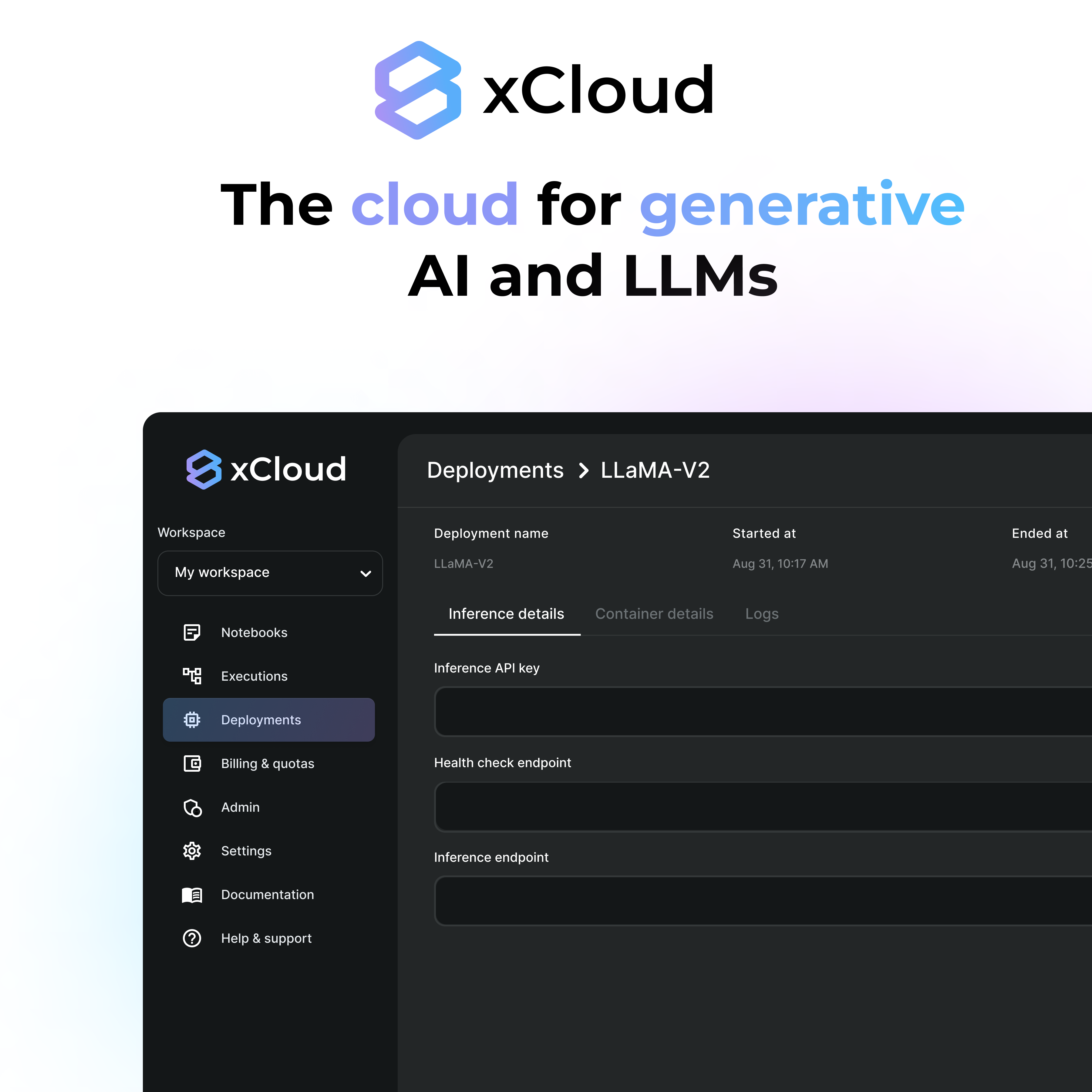

We are thrilled to introduce xCloud, a cloud-native platform for experimenting with, fine-tuning, and deploying Large Language Models (LLMs). xCloud builds on recent advances in deep learning to accelerate performance and optimize resource usage without sacrificing quality or ease of use. In this blog post, we'll walk through xCloud's deployment options and key features, and show how it can transform your AI development process.

Deployment Options

xCloud offers three flexible deployment options to suit your specific needs:

1. In Stochastic's Cloud: Harness the power of xCloud on Stochastic's cloud infrastructure, providing you with a seamless and convenient environment for LLM development and deployment.

2. In Your Virtual Private Cloud (VPC): xCloud is compatible with popular cloud providers like Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure. Deploy xCloud in your VPC on any of these platforms to maintain control over your cloud resources.

3. On-Premises with Kubernetes Cluster: For organizations with strict on-premises requirements, xCloud can be deployed on your Kubernetes cluster, offering the same exceptional capabilities as the cloud-based versions.

Key Features of xCloud

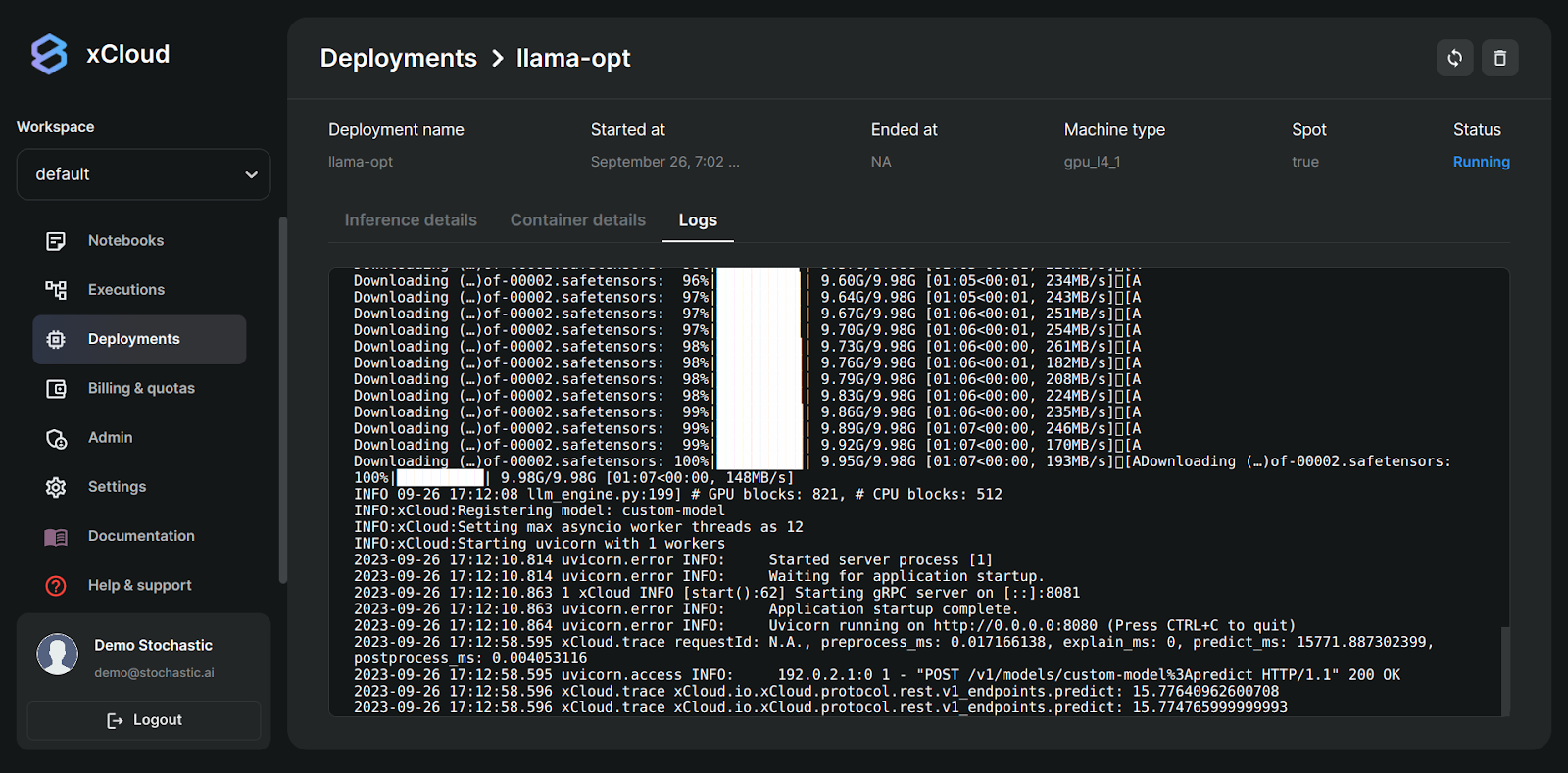

Model APIs: One of xCloud's standout features is its Model APIs, which make fine-tuning LLMs effortless. Provide a dataset of prompt/output pairs, select a base model such as LLaMA 2 70B, and deploy the fine-tuned model on xCloud's optimized inference service.
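The post does not spell out the dataset format, so the following is only a minimal sketch of preparing prompt/output pairs as JSON Lines, one record per line, which is a common format for fine-tuning data. The field names `prompt` and `output` and the helper `write_finetune_dataset` are hypothetical; check the xCloud documentation for the schema it actually expects.

```python
import json
import os
import tempfile


def write_finetune_dataset(examples, path):
    """Write (prompt, output) pairs to `path` as JSON Lines and return the count.

    Hypothetical helper: the "prompt"/"output" field names are an assumption
    for illustration, not a documented xCloud schema.
    """
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for prompt, output in examples:
            # Skip pairs with an empty prompt or output; they carry no training signal.
            if not prompt.strip() or not output.strip():
                continue
            record = {"prompt": prompt, "output": output}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    pairs = [
        ("Summarize: The meeting moved to Friday.", "The meeting is now on Friday."),
        ("Translate to French: Good morning", "Bonjour"),
        ("", "orphan output"),  # dropped by the empty-field filter
    ]
    # Write into a temporary directory so the demo leaves no files behind.
    with tempfile.TemporaryDirectory() as tmp:
        n = write_finetune_dataset(pairs, os.path.join(tmp, "train.jsonl"))
        print(f"wrote {n} examples")  # wrote 2 examples
```

Filtering empty pairs before upload is a cheap guard; a real pipeline would likely also deduplicate examples and hold out a validation split.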

Accelerated Inference: Compared to standard LLM hosting, xCloud delivers 3x lower latency and 30x lower cost while also increasing throughput. It is production-ready, with auto-scaling, dynamic batching, fault recovery, and robust monitoring. xCloud can also scale deployments down to zero during periods of inactivity for additional cost savings.

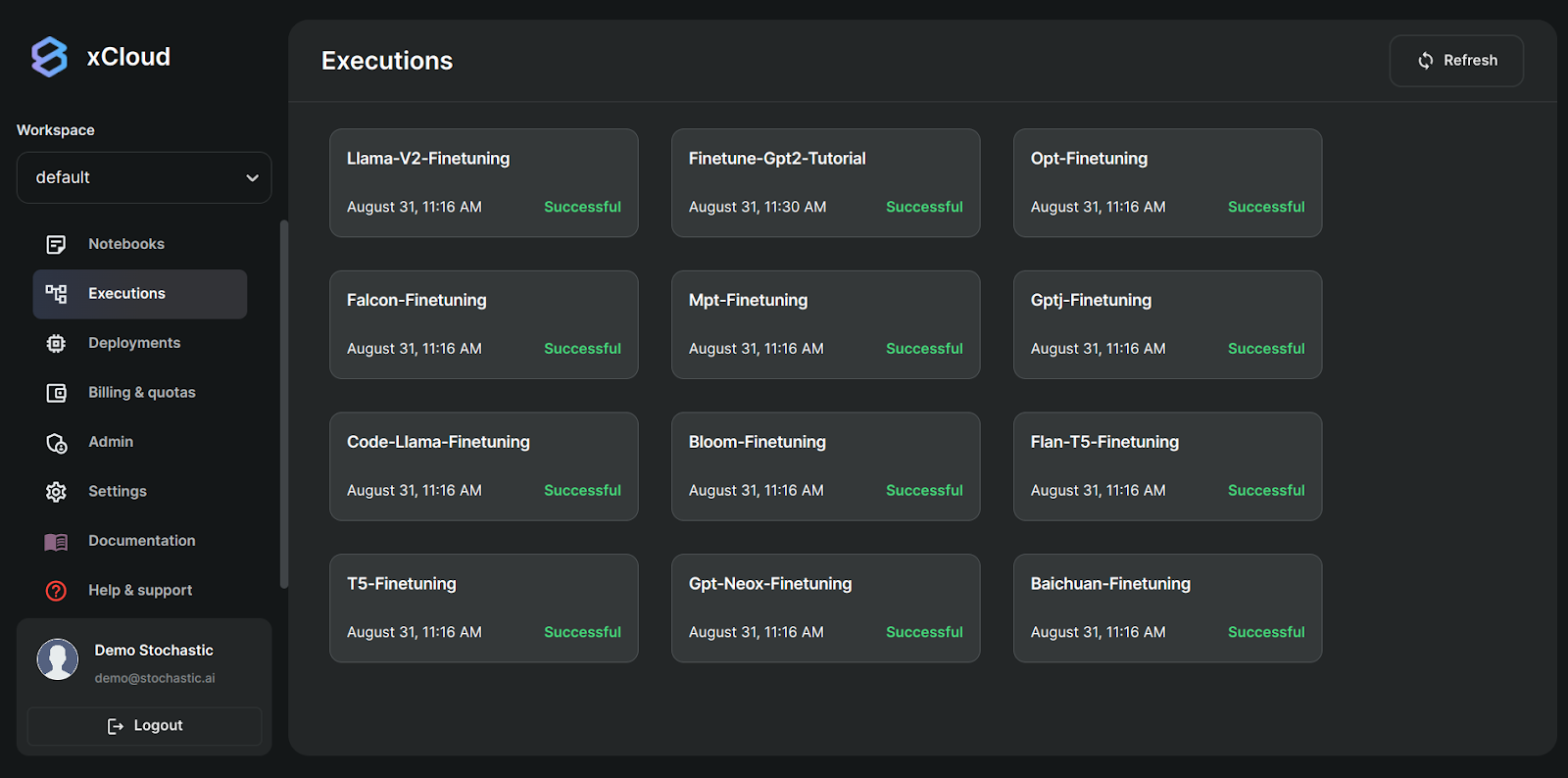

Fine-Tuning Flexibility: With xCloud, fine-tuning LLMs is a breeze. Use your favorite framework, such as PyTorch or TensorFlow, or write your own custom fine-tuning code. The platform isn't limited to fine-tuning, either; you can also run data processing jobs, evaluation pipelines, and more.

Jupyter Notebooks: xCloud offers Jupyter Notebooks, a dynamic, collaborative environment for faster model development and experimentation, easier debugging, and seamless documentation, all of which make for a smoother development experience.

Performance Benchmarks: Easily evaluate your endpoints to confirm they meet your latency and throughput targets. xCloud's benchmarking tools let you simulate many users querying the model concurrently.
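xCloud's own benchmarking interface isn't shown in this post, so here is a generic client-side sketch of what "simulating concurrent users" means: a pool of worker threads sends requests in parallel, each request's latency is recorded, and overall throughput is computed from the wall-clock time. `fake_model` is a hypothetical stand-in that just sleeps; in practice you would replace it with a call to your deployed endpoint. Threads fit here because the work is I/O-bound.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def benchmark(call_model, prompts, concurrency):
    """Send `prompts` using `concurrency` simulated users; return latency/throughput stats."""

    def timed_call(prompt):
        start = time.perf_counter()
        call_model(prompt)
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, prompts))
    wall = time.perf_counter() - wall_start

    # Nearest-rank 95th percentile of per-request latency.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "requests": len(latencies),
        "p50_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "throughput_rps": len(latencies) / wall,
    }


def fake_model(prompt):
    # Hypothetical stand-in for an HTTP request to a deployed model endpoint.
    time.sleep(0.05)
    return prompt.upper()


if __name__ == "__main__":
    stats = benchmark(fake_model, [f"prompt {i}" for i in range(40)], concurrency=8)
    print(stats)
```

Sweeping `concurrency` upward and watching where p95 latency starts to climb while throughput plateaus is one simple way to find an endpoint's practical capacity.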

Multiple Workspaces: Set up separate workspaces within xCloud to isolate projects and streamline collaboration with your team.

Explore xCloud Today

Ready to experience the future of Generative AI development? You can get started with xCloud today by exploring the following links:

Documentation: Dive deep into xCloud's features and capabilities through our comprehensive documentation.

Landing Page: Learn more about xCloud and its offerings by visiting our landing page.

Application: Start using xCloud right away by logging in to our application.

xCloud is set to redefine the way you work with Large Language Models, making it easier, faster, and more cost-effective than ever before. Join us on this exciting journey into the world of Generative AI and get started with xCloud for free!

For a limited time, we are offering free fine-tuning for your use cases.